Ollama

Run LLMs locally with Ollama’s simple app for macOS, Windows, and Linux. Chat with models, process files, and enjoy privacy with no cloud dependencies.

Ollama is a desktop app that lets users run large language models (LLMs) locally on their macOS, Windows, or Linux machines. Developed by the Ollama team, it provides a native GUI for easier model management and interaction. The app emphasizes privacy, speed, and usability, allowing users to download, manage, and interact with various models without relying on cloud services.

Key Features

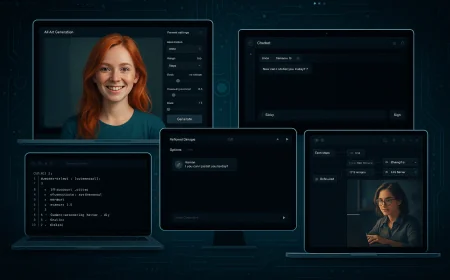

- Run LLMs locally with a native GUI

- Drag and drop files for text processing

- Multimodal support including image input

- Chat with AI models using a simple interface

- Pull and switch between multiple LLMs

- Customizable context length for documents

- Enhanced privacy with local AI use

- Command-line interface for advanced users
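For readers who want to go beyond the GUI, here is a minimal sketch of talking to a locally running Ollama from Python. It assumes the Ollama server is listening on its default local address (`http://localhost:11434`) and that a model has already been pulled; the model name `llama3.2` in the usage note is only an example, and the helper names `build_generate_request` and `generate` are made up for this illustration. The `num_ctx` option is how the context length is adjusted per request.

```python
import json
import urllib.request

# Assumption: the local Ollama server uses its default address and port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model, prompt, num_ctx=4096, url=OLLAMA_URL):
    """Build (but do not send) a non-streaming generate request.

    Hypothetical helper for this sketch, not part of Ollama itself.
    """
    payload = {
        "model": model,                   # must already be pulled locally
        "prompt": prompt,
        "stream": False,                  # ask for one JSON reply, not chunks
        "options": {"num_ctx": num_ctx},  # context length in tokens
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model, prompt, num_ctx=4096):
    """Send the prompt to the local server and return the reply text."""
    request = build_generate_request(model, prompt, num_ctx)
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["response"]
```

With the app running, a call such as `generate("llama3.2", "Summarize this note in one line.", num_ctx=8192)` should return the model's answer as a string. Nothing leaves the machine, since the request goes to localhost only.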

Download Ollama

Download the latest version of Ollama for free using the direct links below. You’ll find app store listings and, where available, installer files for macOS and Windows. All files come from official sources, are original, unmodified, and safe to use.

Last updated on: 2 November 2025. Version: 0.12.9.

- Download Ollama 0.12.9 exe (1.12 GB) [Windows 10+]

- Download Ollama 0.12.9 dmg (46.13 MB) [macOS 14+]

What's new in this version

- Fixed performance regression on CPU-only systems

Installation

Download files are available in different formats depending on your operating system. Make sure to follow the appropriate installation guide: EXE for Windows, DMG for macOS.

Linux users can install Ollama with a single command in the terminal: curl -fsSL https://ollama.com/install.sh | sh
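After installing, it can be handy to confirm that the `ollama` command is actually available. The sketch below is one way to check from Python; it assumes the installer placed an `ollama` binary on your PATH, and the function name `ollama_version` is hypothetical, chosen just for this example.

```python
import shutil
import subprocess


def ollama_version(binary="ollama"):
    """Return the output of `<binary> --version`, or None if it is not on PATH."""
    path = shutil.which(binary)
    if path is None:
        return None  # not installed, or not on PATH
    result = subprocess.run(
        [path, "--version"],
        capture_output=True,
        text=True,
        check=False,
    )
    # Fall back to stderr in case the version line is printed there.
    return (result.stdout or result.stderr).strip()
```

Calling `ollama_version()` returns the version text on a working install and `None` otherwise, in which case re-running the installer or opening a new terminal session is a reasonable next step.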
