OpenAI Introduces o3 and o4-mini — Models That Think Longer and Deeper

The new o3 and o4-mini models from OpenAI excel at complex reasoning, tool use, and multimodal analysis — now available in ChatGPT and via API.

OpenAI has introduced two new models, o3 and o4-mini, as an upgrade to the "o" series focused on complex reasoning and multi-step tasks. For the first time, both models can use every ChatGPT tool: web browsing, Python, file analysis, image understanding, and image generation. This significantly improves their ability to solve tasks that require synthesizing data and reasoning across multiple sources.

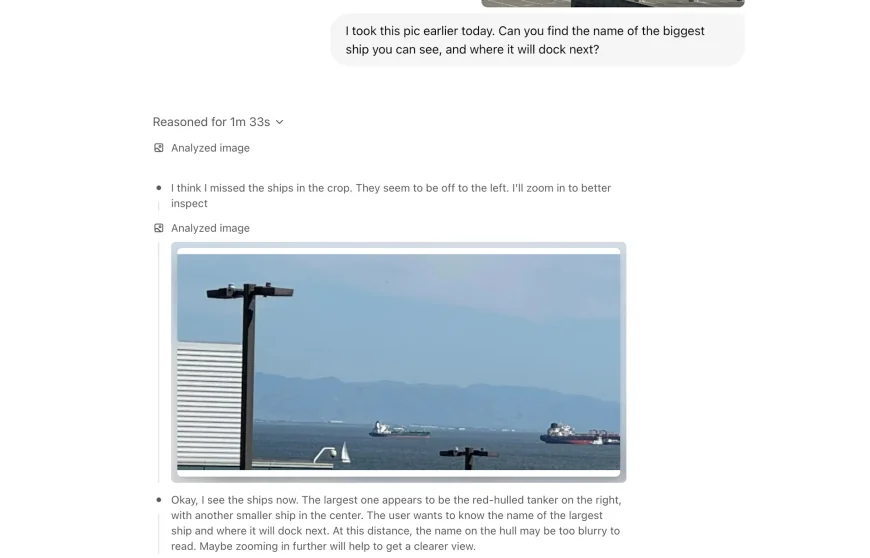

o3 is the most powerful model OpenAI has released to date. It sets new benchmarks in programming, mathematics, visual understanding, and the natural sciences. On GPQA (PhD-level STEM questions), o3 achieved 26.6% accuracy, compared to 8.1% for the previous o1 model. It is the first model that can truly "think through images," analyzing photos, diagrams, and graphs at an unprecedented level. On the MMMU test (university-level multimodal challenges), o3 scored 86.8% accuracy.

o4-mini is smaller and more cost-efficient, but still highly capable. It topped the AIME math tests for both 2024 and 2025, with over 92% accuracy. Thanks to its low compute requirements, o4-mini is well suited to high-volume tasks. For the first time, these models are not just trained to use tools; they can also decide when and how to use them effectively.
