Ollama launches official app for Mac and Windows

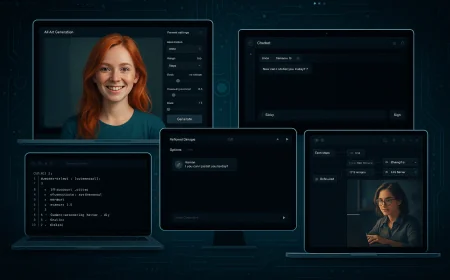

The new app makes it easy to run language models without the cloud or complex setup. The interface is stripped down and accessible.

The developers of Ollama have released an official graphical interface for macOS and Windows, significantly simplifying the process of running large language models (LLMs) on a local machine. Previously, the tool worked only via the command line, and users had to rely on third-party GUIs like Msty for a more convenient experience.

The new app, also called Ollama, lets users choose and download popular models, such as Gemma, DeepSeek, or Qwen, directly from a drop-down list. Once downloaded, the selected model appears next to the input field. Other models can still be pulled from the terminal with the `ollama pull` command.

Running LLMs locally improves data privacy and can reduce energy consumption. All computation stays on your device, with no third-party cloud processing. However, smooth operation requires modern hardware, and older machines may struggle.
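To illustrate what "all computation on your device" means in practice, here is a minimal Python sketch that sends a prompt to a locally running Ollama instance over its local HTTP API. It assumes Ollama is listening on its default address (`http://localhost:11434`) and that a model has already been downloaded; the model tag `gemma3` and the helper names are illustrative assumptions, not something the announcement specifies.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a POST request for Ollama's /api/generate endpoint."""
    payload = {
        "model": model,    # tag of a model that is already downloaded
        "prompt": prompt,
        "stream": False,   # ask for one JSON object instead of a stream
    }
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(model: str, prompt: str) -> str:
    """Send the prompt to the local server and return the generated text."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["response"]


# Example call (needs a running Ollama instance; "gemma3" is a hypothetical tag):
# print(ask_local_model("gemma3", "Say hello in one sentence."))
```

The request targets `localhost` only, so the prompt and the reply never leave the machine.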

The app feels faster than most third-party tools and features a clean, user-friendly interface. It integrates well with the macOS environment, and its minimalist design makes interacting with AI simple and efficient. For now, the app is available only for macOS and Windows.
